I’m really happy and proud to say that since this story was first posted, it has received great feedback and has been widely relayed throughout the Elixir community! The last piece of advice in this article even inspired José Valim to add a test partitioning feature to Elixir 🤗

Some context

I have been using Elixir + Phoenix on a daily basis for 3 years now, and almost since day one my team and I have had continuous integration (CI) and continuous delivery (CD) processes in place.

Since we’re deploying our app on Heroku, we’ve been using:

- SemaphoreCI as our CI tool (because SemaphoreCI is available as a Heroku add-on, simple as that!)

- Heroku native pipelines to perform CD whenever CI is successful on our Git master branch

Although our CD process has always been very reliable, our CI process gradually started to under-perform, becoming both slower and less reliable. Like boiling frogs, we did not really pay attention until very recently, when we had to wait almost 1 hour before being able to deploy our code to staging: each CI run would take 15 minutes, and random test failures forced us to re-run each build a few times before getting the green light. This drove us really crazy, and led us to take action!

In this article, we’ll talk about the actions we took to turn an unreliable, randomly failing, 15-minute CI process into a solid and steady 3-minute build.

#1 — Improve worst tests

The first action is to find the culprits: which tests are really slow, and which ones fail randomly?

Running mix test --trace is very useful as it gives you the detailed execution time of each test. But my favorite is mix test --slowest 10, which prints the list of the 10 slowest tests. I have no general-purpose advice to give about fixing these tests, but the slowest ones are usually quite easy to improve.

Regarding randomly failing tests, two things can really help:

- a simple bash loop to run a single test over and over. If the sum it prints is anything other than 0, the test is unreliable.

(for i in {1..10}; do mix test test/lib/my_app/features/my_browser_test.exs:10 > /dev/null; echo $?; done) | paste -s -d+ - | bc

- a wait_until function that helps to fix unreliable browser tests (we're using Hound with chromedriver). Here is the gist.
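A possible variation on the bash loop above, as a minimal sketch: the helper name count_failures is made up for this example (it is not part of mix). It runs any command a given number of times and prints how many runs exited with a non-zero status, rather than summing the exit codes.

```shell
# Run a command N times and print how many of those runs failed.
# Usage: count_failures <runs> <command> [args...]
count_failures() {
  runs="$1"; shift
  failures=0
  i=0
  while [ "$i" -lt "$runs" ]; do
    # Discard the command's output; only its exit status matters here.
    "$@" > /dev/null 2>&1 || failures=$((failures + 1))
    i=$((i + 1))
  done
  echo "$failures"
}

# Example with the test file used above (10 runs):
#   count_failures 10 mix test test/lib/my_app/features/my_browser_test.exs:10
```

Any result other than 0 means the test failed at least once and deserves a closer look.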

One last ugly trick 🙈, which I am ashamed of and urge you not to use, is to give your failing tests a second chance! Running your tests with mix test || mix test --failed will automatically rerun any failed tests and still give you a 0 exit code if they pass the second time.

Pro tip: I strongly encourage you to read the output of mix help test: there are hidden gems in there!

#2 — Cache all things

At this stage, the build became way more reliable (99% success rate) but still really slow (~ 12 min).

My first attempt to improve this was to see if the grass was greener at GitHub with their brand new GitHub Actions, and if it would result in a shorter build. Although the product is promising, I did not manage to run any of our chromedriver tests with it, and the execution time was quite similar anyway.

I then decided to go back to Semaphore and migrate from the classic plan to their new SemaphoreCI 2.0. The new Semaphore (like GitHub Actions btw) requires you to describe your CI pipeline in a YAML file committed alongside your source code. In this YAML file you can run Docker containers, arbitrary shell commands or specific Semaphore commands, including the very useful cache commands.

By using cache store and cache restore at strategic locations you can make most of your builds way faster:

- by not re-downloading Elixir dependencies

- not re-compiling all Elixir code, only updated code

- not re-downloading NPM/Yarn dependencies
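As a rough sketch of the caching steps listed above, assuming Semaphore's cache CLI is available on the PATH of the CI machine: the helper names lock_key and with_cache are made up for this example, and cksum is used as a portable stand-in to derive a key from a lock file. It is not our actual pipeline.

```shell
# Print "<prefix>-<checksum of lock file>": the key only changes when the
# lock file changes, so unchanged dependencies keep hitting the same cache.
lock_key() {
  printf '%s-%s\n' "$1" "$(cksum < "$2" | cut -d' ' -f1)"
}

# Restore a cached directory, run the given command, then store the result.
# Usage: with_cache <key> <directory> <command> [args...]
with_cache() {
  key="$1"; dir="$2"; shift 2
  # The guard makes this a plain command run on machines without `cache`.
  if command -v cache > /dev/null 2>&1; then cache restore "$key"; fi
  "$@" || return 1
  if command -v cache > /dev/null 2>&1; then cache store "$key" "$dir"; fi
}

# Hypothetical usage in an Elixir project:
#   with_cache "$(lock_key mix-deps mix.lock)" deps mix deps.get
```

The same pattern can be applied to the _build directory and to NPM/Yarn dependencies, each with its own key.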

Pro tip: have a look at fail_fast and auto_cancel Semaphore settings. Real time savers!

#3 — Leverage parallelism

Caching really helped, and at this point we could have stopped improving the process, as our build time had already gone down to 6 minutes.

But reading SemaphoreCI documentation is insightful, and their concepts of blocks and jobs that can run either sequentially or in parallel suggest there is still room for improvement!

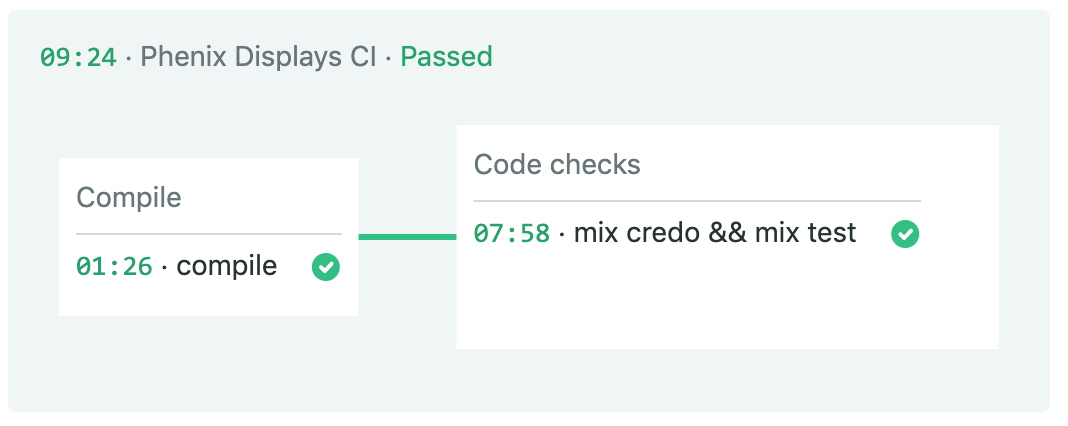

Our build was really straightforward back then:

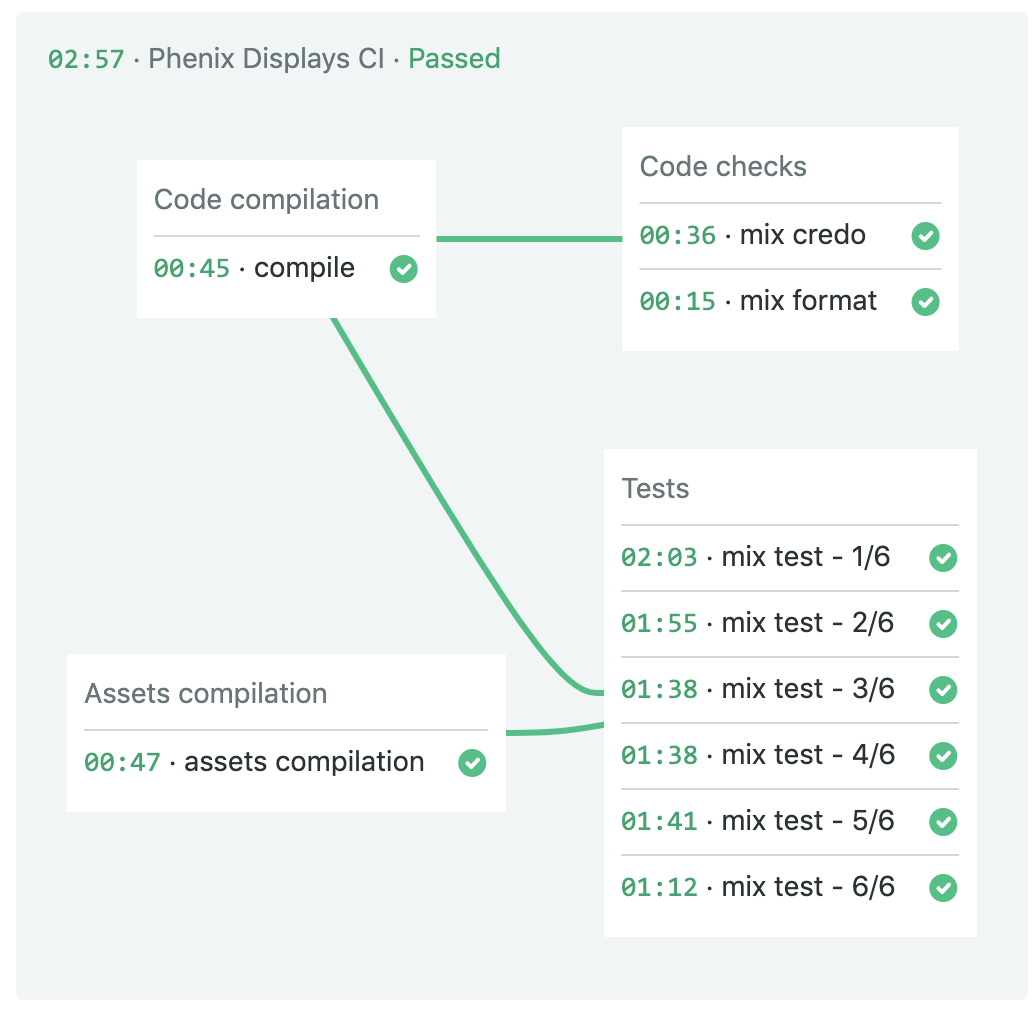

The first improvement is to run in parallel what can be done in parallel:

- mix credo (the Elixir code analysis tool) and mix test can be run at the same time. With a fail-fast strategy that stops running tests whenever credo fails, it can be a real time saver!

- JS & CSS assets can be compiled at the same time as Elixir code: it can save up to one minute.

The final step is to split our tests into different batches to also run them in parallel. I wrote this custom test runner (which simply wraps the mix test shell command) that splits all test files into N batches (by hashing their filename) and only runs a single batch out of N:

# Run all tests in 4 random batches

$> mix n_test 1 4

$> mix n_test 2 4

$> mix n_test 3 4

$> mix n_test 4 4

You can then leverage SemaphoreCI parallelism to run a single task N times with an incremental index passed as an environment variable.

Our CI finally looks like this, running at a solid and steady 3-minute pace!

Pro tip: save time learning Semaphore by reading our build pipeline setup
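To illustrate the idea of hashing filenames into batches, here is a minimal shell sketch. It is not the custom runner mentioned above: the function names batch_of and select_batch are made up for this example, and cksum is just one convenient way to turn a path into a number.

```shell
# Print the 1-based batch (1..N) a file belongs to, based on a checksum of
# its path. The same path always lands in the same batch.
# Usage: batch_of <path> <N>
batch_of() {
  crc=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
  echo $(( crc % $2 + 1 ))
}

# Read file paths on stdin and print only those assigned to batch <index>.
# Usage: select_batch <index> <N>
select_batch() {
  while IFS= read -r file; do
    if [ "$(batch_of "$file" "$2")" -eq "$1" ]; then
      printf '%s\n' "$file"
    fi
  done
}

# Hypothetical usage for job 2 out of 4:
#   find test -name '*_test.exs' | sort | select_batch 2 4 | xargs mix test
```

Every file ends up in exactly one batch, so the N jobs together cover the whole suite. Batch sizes are only roughly balanced, and a batch can even be empty with few files; in that case make sure the job does not fall back to running mix test without arguments.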

Wrapping up

It has been known for years how important Continuous Integration is for software teams of any size. But it should be a source of trust, happiness and increased productivity, never a source of frustration!

So start paying attention, and maybe some of the guidelines in this post will help you improve your CI build!

NB - I was not paid by SemaphoreCI to write this article, so feel free to experiment with any other CI software, as long as it offers you caching & parallelism.